Evolving Threats and Regulations in Software Supply Chain Security

2023 is in the rearview mirror and 2024 is now well underway. I wanted to post my thoughts on some of the software supply chain trends we saw last year and how they will continue to shape...

EU Cyber Resilience Act (CRA) Clears Penultimate Step

On December 3rd, the EU's new Cyber Resilience Act (CRA) got a big step closer to being adopted when the European Parliament and the EU Council reached an agreement on the legislation. It...

The Wretched State of OT Firmware Patching

This blog is a follow-up to our first post on the 2023 Microsoft Digital Defense Report, where I described our collaboration with Microsoft on identified exploitable OT vulnerabilities. There...

Microsoft Digital Defense Report: Behind the Scenes Creating OT Vulnerabilities

Earlier this summer, aDolus collaborated with Microsoft on vulnerability analysis and contributed to their Microsoft Digital Defense Report 2023 (MDDR 2023). This report is a significant...

An Analysis of Generative AI: How to Be Confidently Wrong

The recent release of the National Cybersecurity Strategy document by the White House prompted me to test Microsoft's new Bing chat feature, which is powered by OpenAI's language model,...

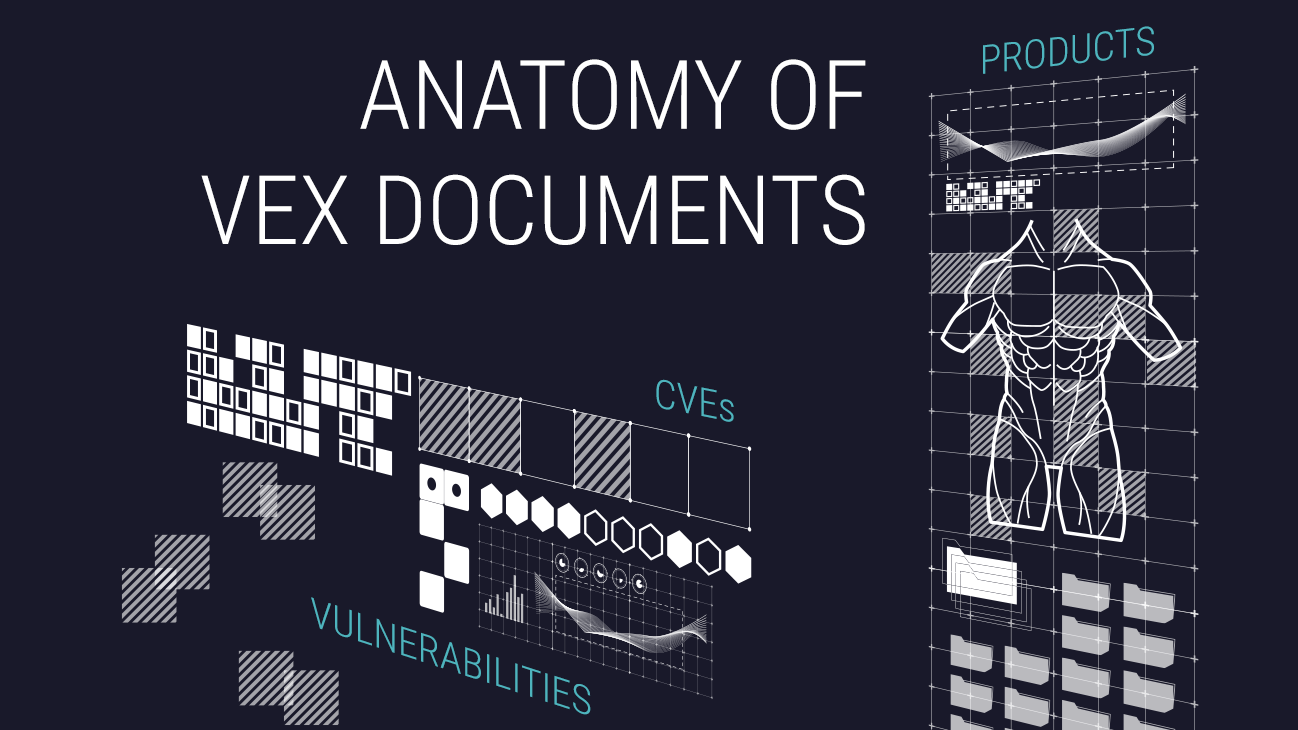

S4x23 SBOM Challenge — Part 3: VEX Document Ingestion

Three weeks ago I reported on the first part of the S4x23 SBOM Challenge run by Idaho National Laboratory (INL), which focused on SBOM Creation. Last week I reported on the second part:...

S4x23 SBOM Challenge — Part 2: SBOM Ingestion

Two weeks ago I reported on the first part of the SBOM Challenge at the S4x23 cybersecurity conference in Miami, Florida. The Day 1 goal was for each team to create an accurate SBOM for...

Three Quick Takeaways from Biden’s National Cybersecurity Strategy

NOTE: We were going to publish our second blog of the S4x23 SBOM Challenge today. However, the new National Cybersecurity Strategy was released this morning, and we thought that...

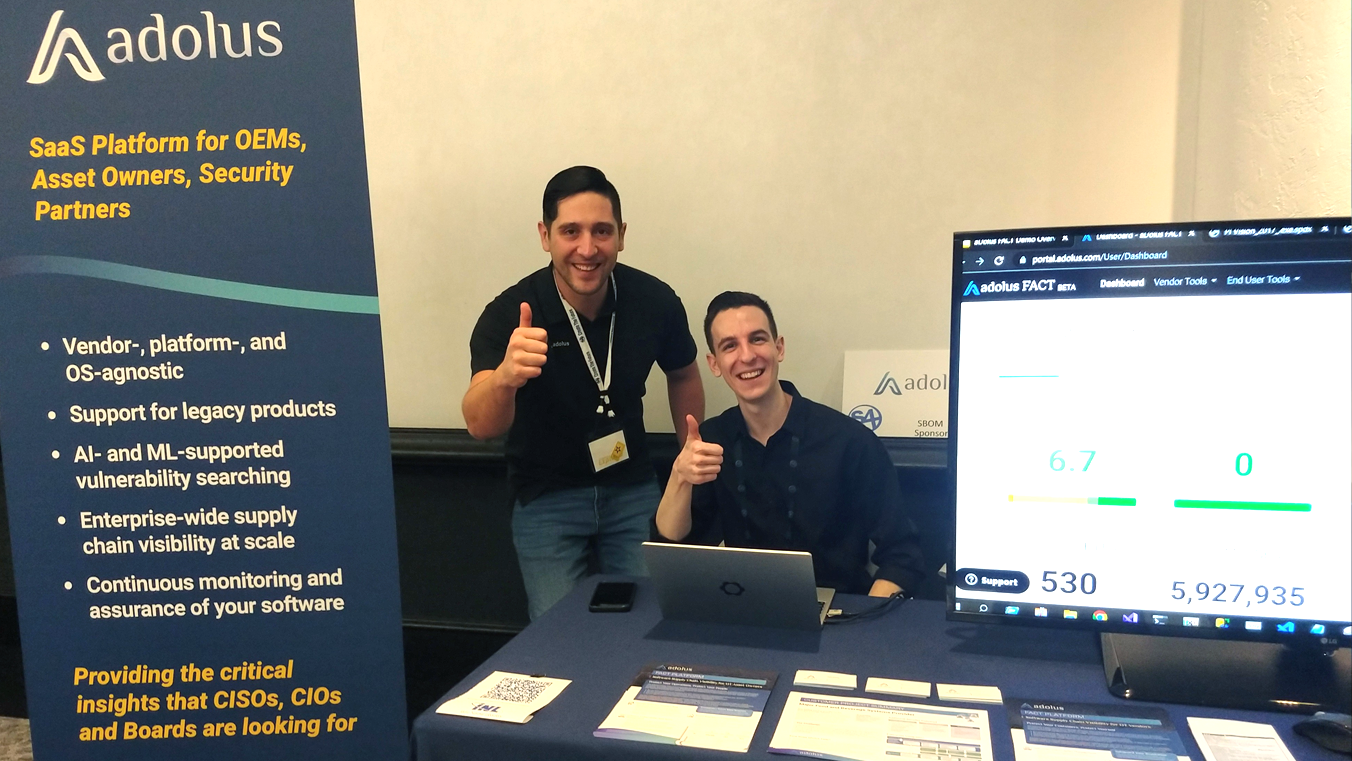

S4x23 SBOM Challenge — Part 1

The aDolus Team has just returned from participating in the SBOM Challenge at the S4x23 cybersecurity conference in Miami, Florida. This blog is the first of a series reporting on what we...

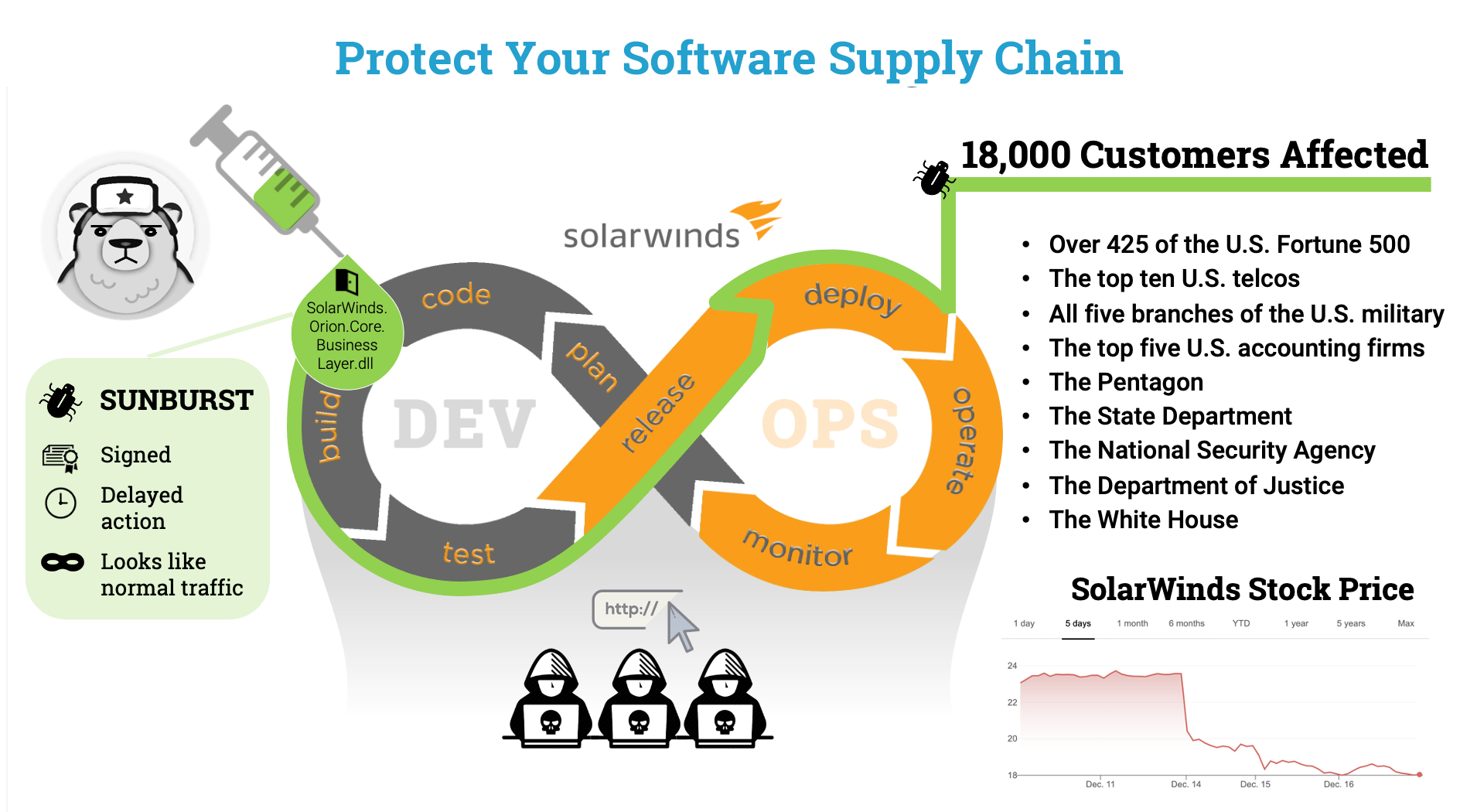

A Flurry of Regulatory Action and the Need for SBOMs

Executive Order 14028 on Improving the Nation's Cybersecurity was issued in May of 2021 and provided a roadmap for a series of regulatory initiatives that government agencies (and anyone...
